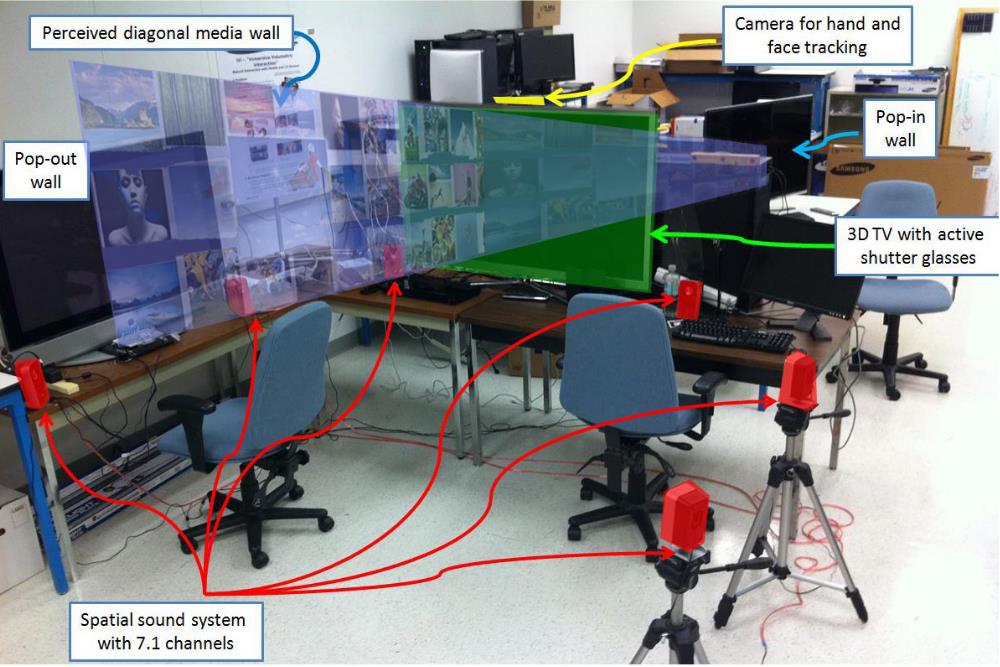

System setup, with perceived media wall

This project demonstrates new media-browsing methods based on direct bare-hand manipulation in 3D space, presented on a large stereoscopic display (e.g., a 3D TV) with 3D spatial sound. We developed a prototype on an ARM-based embedded Linux platform using OpenGL ES for visual rendering, OpenAL for spatial audio rendering, and ARToolKit for hand tracking.

The main contribution was the design of multiple gesture interaction methods for a 3D spatial setting and their implementation in a working prototype that combines remote spatial gestures, stereoscopic image rendering, and spatial sound rendering.

The project team at Samsung R&D included Seung Wook Kim, Stefan Marti, and me.

Video

Demonstration of several proposed interaction techniques.